Building the Unix Spelling Corrector from Scratch - Part 1

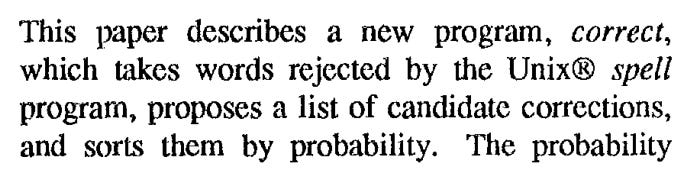

The paper “A Spelling Correction Program Based on a Noisy Channel Model” from 1990 explains the application of probability theory to solving the problem of implementing the Unix spell corrector program, appropriately named, correct.

My purpose in going through this paper is to develop a bottom up understanding in the area of spell correctors - which act as a stepping stone to understand n-gram models (which are based on Markov Chains, and other such ideas). These in turn can be used to understand the newer generation of LLMs with good mathematical clarity.

For now - we will focus on this paper alone, and figure out how the correct program is arrived at.

First - the paper starts with a comparison of spell program and the proposed correct program. For context - the spell program just loops through a dictionary list of words - and prints unrecognized words in the input text. That is - it is a simple dictionary match on the given words. Whereas the proposed correct goes one step further by taking such a potentially malformed word, lists candidate fixes, and orders them by probability.

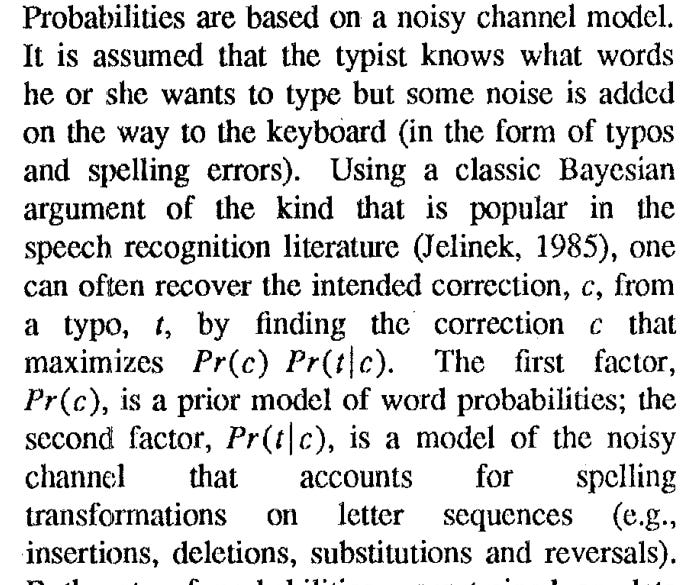

Right off the bat - we get into the mathematical principle of relevance. The authors introduce the idea of “noisy channel”. It’s just a fancy way of saying typo. The metaphor comes from the speech recognition literature - where noise messes up with recordings. Anyway - in simple terms this is how it works:

The author wants to type the correct version c

But due to some mistakes (add/delete/change) - the author types in t

So, the given input is t, but it has some mistake, since it’s not in the dictionary. We want to guess c from t. Bayes rules is helpful here. We want to find the word c such that the following is maximized:

The denominator P(t) is dropped here - because it’s common to all candidates, and so cancels out relatively speaking.

From existing statistical analysis of text - we already know what P( c ) for all words - how commonly does this word appear? For example, a word like “the” appears way more than an old English formulation “thee”. If it’s a more common word, it’s more likely to be the real target the author intended.

The P(t|c) part captures how likely it is to mistype c into t.

Examples:

If c = “their” → t = “thier” is a very common mistake → high probability.

If c = “their” → t = “x7qz!” is basically impossible → low probability.

For each candidate real word c:

If the word is common,

P(c)is high.If the typo is close to that word,

P(t|c)is high.

The model picks the c with the highest product.

So for “thier”:

P(their)is highP(”thier” | “their”)is high (common transposition)

Result: output “their”.

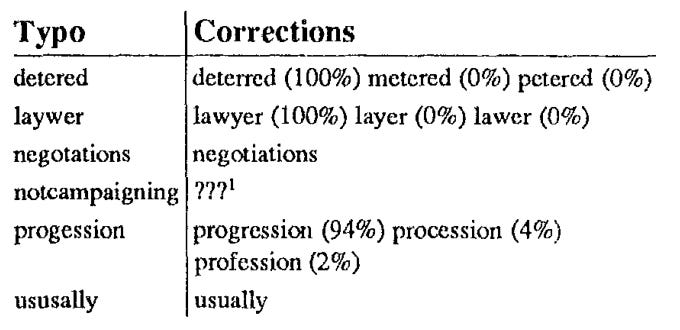

The correct command could output corrections something like this: